How Good Is a Computer with Sensory Devices?

Watch this video – How AI Robots can see? https://www.youtube.com/shorts/4DjhLfn_MD0

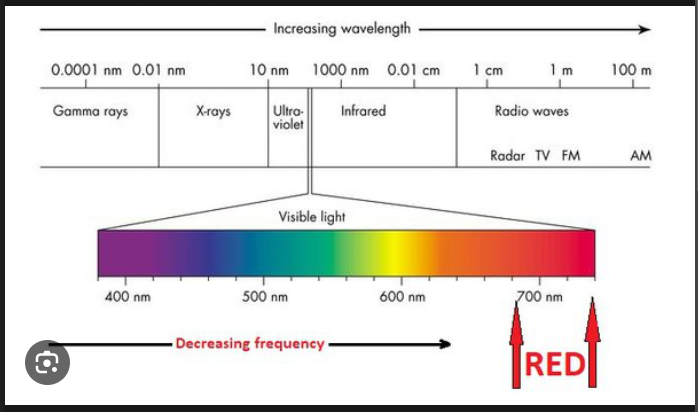

What you saw is not magic; it is science. Recall how the first camera captured a picture: every object reflects or emits light at particular frequencies (which we perceive as colors), and a receiver can collect that light and represent the object in another form, such as a print. The print is itself an object that reflects similar frequencies, which is why it looks like the original.

So, the camera receives those light frequencies and forms an image from them. The eardrum is also a receiver, but for sound frequencies, and a listener can use them to build a picture of whatever is producing the sound. In living things, the eye (like a camera) has more capabilities (or features) than the ear for receiving those frequencies and forming images of the objects they come from.

The robot (computer software and memory) can do the same thanks to a very large amount of program code. It can form images from the frequencies its sensors receive. The robot carries tiny sensors, such as cameras (eyes) and microphones (ears), and its software turns their signals into images of the objects that produced those frequencies. This is the same scientific approach that was taken to build the first camera. This time, it works better because of the sheer amount of code and the memory capacity to hold it. If you destroy those sensors (on a robot or a living thing), the code (or the sensory cells) has nothing to work with, and the robot or organism can no longer hear, see, or form images.

The computer can also use Doppler radar sensors. A radar measures the distance to an object by timing how long a pulse of energy takes to travel to the object and reflect back. This way, it can map the shapes of objects in a room. The radar's computer also measures the phase change of the reflected pulse and converts that change into the velocity of the object, either toward or away from the radar. This ability to locate an object and track its movement is part of what enables a computer or robot to “see” its surroundings (https://www.weather.gov/mkx/using-radar).
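The two radar measurements can be sketched in a few lines of Python. This is only an illustration, not how any particular radar is programmed: the function names and sample numbers are made up for this example, and the phase formula assumes a simple pulse radar that compares the phase of two echoes sent a fixed time apart.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def range_from_echo_delay(delay_s):
    """Distance to the object, from the round-trip time of one pulse.

    The pulse travels out and back, so the one-way distance is half.
    """
    return SPEED_OF_LIGHT * delay_s / 2.0

def radial_speed_from_phase_shift(phase_shift_rad, wavelength_m, pulse_interval_s):
    """Speed toward or away from the radar, from the phase change
    between two successive echoes (assumed pulse-pair model).

    Moving by d changes the round-trip path by 2*d, which shifts the
    phase by 4*pi*d / wavelength. Which sign means "toward" depends on
    the radar's convention, so only the magnitude is meaningful here.
    """
    return wavelength_m * phase_shift_rad / (4.0 * math.pi * pulse_interval_s)

if __name__ == "__main__":
    # Hypothetical readings: echo after 1 ms, 10 cm wavelength,
    # pulses 1 ms apart, quarter-turn phase change.
    print(range_from_echo_delay(1e-3))
    print(radial_speed_from_phase_shift(math.pi / 2, 0.1, 1e-3))
```

A phase change larger than half a turn cannot be told apart from a smaller change in the opposite direction, so real radars can only report speeds up to a limit set by the wavelength and the time between pulses.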

The first device able to reproduce and capture an image was invented in 1816 by Joseph Nicéphore Niépce and was called the heliograph. In 1839, Louis Daguerre created the daguerreotype, which was much closer to the photographic camera concept we know today (https://www.adorama.com/alc/camera-history).

A black object has no visible frequency of its own. Blackness is the absence of visible light, so turning on a light of any visible frequency would mean that you don’t have black. White is a mixture of all visible frequencies; black is none of them (https://www.quora.com/What-is-the-frequency-of-black).
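To put numbers on this, the frequency of a color follows from its wavelength: frequency equals the speed of light divided by the wavelength. Below is a minimal Python sketch. The function names are made up for this example, and the sample wavelengths (about 700 nm for red, about 400 nm for violet) are rounded, approximate values.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def frequency_thz(wavelength_nm):
    """Frequency in terahertz of light with the given wavelength in nanometers."""
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT / wavelength_m / 1e12

def describe_light(wavelengths_nm):
    """Describe a light source by the frequencies it contains.

    An empty list means no visible light at all, which is black.
    """
    if not wavelengths_nm:
        return "black (no visible light)"
    return ", ".join(f"{frequency_thz(w):.0f} THz" for w in wavelengths_nm)

if __name__ == "__main__":
    print(describe_light([700]))       # approximate red
    print(describe_light([700, 400]))  # a mixture of red and violet
    print(describe_light([]))          # nothing switched on
```

Red light comes out at roughly 428 THz and violet at roughly 749 THz, while the empty list has no frequency to report, which matches the idea that black is the absence of light rather than a color with its own frequency.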

Color Frequency Chart